Lab 15: Web Scraping

🎯 Lab Objective: In this lab you will learn to scrape data from websites.

- robots.txt contains a website's scraping policy.

- A web scraper is a program that extracts data from websites.

- A web crawler is a web scraper that also performs link discovery.


Web scraping is the process of extracting data from websites.

✅ Tip: Some people call web scraping data mining. But data mining is a much broader term that includes many other techniques.

Web scraping should be conducted ethically and legally, respecting website terms of service and copyright. If you are not careful, you could get your IP address banned or face legal action. OpenAI is being sued for scraping the training data for ChatGPT without consent.

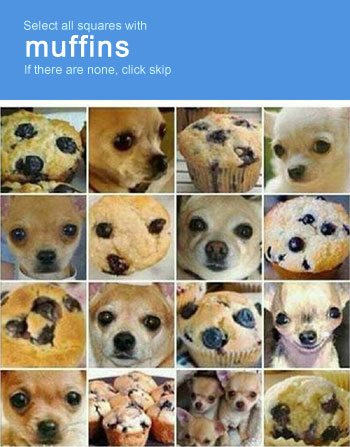

Nevertheless, web scraping is a legal practice and can be a powerful tool in many situations. Websites that really dislike their data being scraped will prevent access with paywalls, logins, or human verification systems like CAPTCHA.

You can check a website's scraping policy by looking at its robots.txt file. For example, https://www.facebook.com/robots.txt prohibits almost all web scraping, and so does https://trademe.co.nz/robots.txt

📝 Task 1: robots.txt

Read the robots.txt for a website.

- Choose a website

- Take their domain name e.g. www.example.com

- Add /robots.txt to the end.

- Visit the URL in your browser.

Is your chosen website friendly to web scrapers?
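The same check can be done in code. Below is a minimal sketch using Python's standard `urllib.robotparser` module. The robots.txt content and the helper name `is_allowed` are made up for illustration; a real scraper would load the site's actual file instead.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, invented for this example.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /
"""


def is_allowed(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt text permits fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text directly, no network access
    return parser.can_fetch(user_agent, url)


print(is_allowed(SAMPLE_ROBOTS_TXT, "https://www.example.com/index.html"))
print(is_allowed(SAMPLE_ROBOTS_TXT, "https://www.example.com/private/data"))
```

With this sample policy the first URL is permitted and the second is not, because it falls under the `Disallow: /private/` rule.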

We are going to use Selenium, a popular tool for web scraping. It can render JavaScript-heavy sites, supports multiple browsers, and can emulate user behavior. However, for simpler tasks or static websites, alternatives like BeautifulSoup or Requests may be more efficient.
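To illustrate why a browser is not always needed, here is a rough sketch that extracts text from static HTML without Selenium. It uses Python's built-in `html.parser` rather than BeautifulSoup or Requests, so that it runs with no extra installs. The HTML snippet, the class name `QuoteTextParser`, and the placeholder quotes are all assumptions made for this example.

```python
from html.parser import HTMLParser


class QuoteTextParser(HTMLParser):
    """Collect the text inside every <span class="text"> element."""

    def __init__(self):
        super().__init__()
        self.in_text_span = False
        self.quotes = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) tuples
        if tag == "span" and ("class", "text") in attrs:
            self.in_text_span = True
            self.quotes.append("")

    def handle_endtag(self, tag):
        if tag == "span":
            self.in_text_span = False

    def handle_data(self, data):
        if self.in_text_span:
            self.quotes[-1] += data


# Hypothetical static HTML, invented for illustration.
SAMPLE_HTML = """
<div class="quote">
  <span class="text">First placeholder quote.</span>
  <small class="author">Author A</small>
</div>
<div class="quote">
  <span class="text">Second placeholder quote.</span>
  <small class="author">Author B</small>
</div>
"""

parser = QuoteTextParser()
parser.feed(SAMPLE_HTML)
print(parser.quotes)
```

This only works when the data is already present in the HTML the server sends. Pages that build their content with JavaScript still need a real browser, which is where Selenium comes in.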

The website we will scrape data from is Zyte’s Web Scraping Sandbox: www.toscrape.com.

You may use either Python or Java for this lab.

📝 Task 2: Selenium Setup

Select either 🐍 Python or ☕️ Java below and follow the steps to get Selenium running.

✅ Tip: Python has a simpler syntax imho, but the choice is yours.

🐍 Python

- On the lab machines run this script: K:\setup\enable-python.ps1

- Right click > Run with PowerShell

- Install the Selenium package: pip install selenium

- Make sure the below code works.

- it should first open a browser

- visit Google, search for “COSC203”

- wait 5 seconds, then close the browser.

from selenium import webdriver

from selenium.webdriver.common.by import By

from time import sleep

driver = webdriver.Firefox()

# driver = webdriver.Chrome()

# visit a URL

driver.get("http://www.google.com/")

sleep(1)

# find the search box, type in a query, then submit

search_box = driver.find_element(By.NAME, "q")

search_box.send_keys("COSC203")

search_box.submit()

sleep(5)

# close the browser

driver.quit()

☕️ Java

- If working on the lab machines, run this script first: K:\setup\enable-java.ps1

- Right click > Run with PowerShell

- Download this repo

- https://altitude.otago.ac.nz/cosc203/code/web-scraper

- 📁 Open this project/folder with Visual Studio Code

- ⬇️ Required extensions

- Java Extension Pack

- Maven for Java

- ▶️ Click Run and Debug (Ctrl + F5)

package cosc203;

// import

import java.util.Scanner;

import org.openqa.selenium.*;

import org.openqa.selenium.firefox.FirefoxDriver;

import org.openqa.selenium.safari.SafariDriver;

public class App {

public static void main(String[] args) throws InterruptedException {

WebDriver driver = new FirefoxDriver();

// WebDriver driver = new SafariDriver();

// visit a URL

driver.get("http://www.google.com/");

Thread.sleep(1000);

// find the search box, type in a query, then submit

WebElement searchBox = driver.findElement(By.name("q"));

searchBox.sendKeys("COSC203");

searchBox.submit();

System.out.println("\n\nPress Enter to close browser.");

new Scanner(System.in).nextLine();

// quit() closes every window and ends the WebDriver session

driver.quit();

}

}

Java code troubleshooting tips.

- In a terminal run java --version and javac --version

- Update pom.xml to match your Java version

- probably 17 or 18.

<maven.compiler.source>18</maven.compiler.source>
<maven.compiler.target>18</maven.compiler.target>

We found Firefox to be the most reliable, but you can use either Chrome or Safari.

- Open Safari

- Safari > Preferences > Advanced > Show Develop menu in menu bar

- Develop > Allow Remote Automation

- Update App.java to use Safari

import org.openqa.selenium.safari.*;
WebDriver driver = new SafariDriver();

- Update App.java to use Chrome

import org.openqa.selenium.chrome.*;
WebDriver driver = new ChromeDriver();

- If you get an error about chromedriver not being found, you need to download it.

- Google Chrome > Help > About > Find Version number

- Download chromedriver

- Version 115 or older

- Version 116

- Place the files in your project directory

System.setProperty("webdriver.chrome.driver", "path/to/chromedriver.exe");
WebDriver driver = new ChromeDriver();

We’ll start with the “quotes” website.

📝 Task 3: View Page Source

- In a browser visit: https://quotes.toscrape.com/

- Inspect page source

- Right Click > View Page Source

- Find these elements in the HTML:

- <div class="quote">

- <span class="text">

- <small class="author">

- <a href="/page/2/">Next →</a>

Let’s start scraping!

📝 Task 4: Scraping Quotes

Start with the provided example code

- Change the URL to https://quotes.toscrape.com/

- Retrieve a list of all quote elements:

🐍 Python

quotes = driver.find_elements(By.CLASS_NAME, "quote")

☕️ Java

List<WebElement> quotes = driver.findElements(By.className("quote"));

- Print the number of quotes found.

- It should be 10

The find_element(…) method returns a single WebElement, and find_elements(…) returns a list of WebElements. In Java these are findElement(…) and findElements(…).

Both accept a By selector as an argument, which identifies which element(s) to find.

| 🐍 Python | ☕️ Java | Description |
|---|---|---|
| By.ID | By.id(String) | Matches elements by the id attribute. |
| By.CLASS_NAME | By.className(String) | Matches elements by the class attribute. |
| By.TAG_NAME | By.tagName(String) | Matches elements by the tag name (e.g., div, a, input). |
| By.LINK_TEXT | By.linkText(String) | Matches anchor elements (<a>) by the exact text. |
| By.PARTIAL_LINK_TEXT | By.partialLinkText(String) | Matches anchor elements by partial text. |
| By.CSS_SELECTOR | By.cssSelector(String) | Matches elements using CSS selectors. |
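One practical difference between the two methods: find_element raises NoSuchElementException when nothing matches, while find_elements simply returns an empty list. The sketch below wraps that in a small helper. The names `find_optional` and `demo` are hypothetical, and `demo` is never called automatically because it opens a real browser.

```python
def find_optional(parent, by, value):
    """Return the first matching element, or None when nothing matches.

    parent can be a driver or a WebElement; both provide find_elements.
    """
    matches = parent.find_elements(by, value)
    return matches[0] if matches else None


def demo():
    """Hypothetical usage against the quotes sandbox (opens Firefox)."""
    from selenium import webdriver
    from selenium.webdriver.common.by import By

    driver = webdriver.Firefox()
    try:
        driver.get("https://quotes.toscrape.com/")
        next_link = find_optional(driver, By.LINK_TEXT, "Next →")
        print("has next page:", next_link is not None)
    finally:
        driver.quit()  # always end the session, even if something fails
```

Checking for None avoids wrapping every lookup in a try/except block when an element is allowed to be missing.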

Once you have a WebElement you can interact with it.

🐍 Python

element = driver.find_element(By.ID, "x")

text = element.text

☕️ Java

WebElement element = driver.findElement(By.id("x"));

String text = element.getText();

🐍 Python

element.click()

☕️ Java

element.click();

🐍 Python

element.send_keys('some_text')

☕️ Java

element.sendKeys("some_text");

Now, let’s scrape multiple pages.

📝 Task 5: Clicking Links

The link we want to click is the “Next →” link at the bottom of the page. It’s an <a> tag with the text “Next →”. So we can use the By.LINK_TEXT selector.

- Find and click the “Next →” link

🐍 Python

next_button = driver.find_elements(By.LINK_TEXT, "Next →")
if len(next_button) > 0:
    next_button[0].click()

☕️ Java

List<WebElement> nextButton = driver.findElements(By.linkText("Next →"));
if (nextButton.size() > 0) {
    nextButton.get(0).click();
}

- Wrap your code in a loop to visit all the pages.

- The last page doesn’t have a “Next →” link

- so end the loop when you can’t find the link.

- Each iteration of the loop should find 10 quotes.

Extract the data from each quote.

📝 Task 6: Extracting Data

The quote element has three child elements: text, author, and tags. We can find these elements easily by calling find_element again on the parent element.

- The following code snippets will print the text of each quote.

🐍 Python

for q in quotes:
    child_element = q.find_element(By.CLASS_NAME, "text")
    print(child_element.text)

☕️ Java

for (WebElement q : quotes) {
    WebElement text = q.findElement(By.className("text"));
    System.out.println(text.getText());
}

- Print the author of each quote.

- Count the total number of quotes.

- Only print quotes by “Mark Twain”

Debugging can be tricky, but it’s much easier if you can print the raw HTML of WebElements.

🐍 Python

html = element.get_attribute("outerHTML")

print(html)

☕️ Java

String html = element.getAttribute("outerHTML");

System.out.println(html);

You can also use .get_attribute(...) to get the other attributes… like all the class names.

🐍 Python

classes = element.get_attribute("class")

print(classes)

☕️ Java

String classes = element.getAttribute("class");

System.out.println(classes);
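The class attribute comes back as one string, with multiple class names separated by spaces. A small sketch of splitting it into individual names follows. The helper name `class_names` and the example value "star-rating Three" are assumptions for illustration, not output captured from a real page.

```python
def class_names(class_attribute):
    """Split a class attribute string into a list of individual class names.

    get_attribute can return None when the attribute is missing,
    so None is treated as an empty string here.
    """
    return (class_attribute or "").split()


# Assumed example value, standing in for element.get_attribute("class")
names = class_names("star-rating Three")
print(names)
print("star-rating" in names)
```

Testing membership in the list is safer than a substring check on the raw string, since a substring check would also match longer class names that merely contain the one you want.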

There is also another web scraping sandbox which simulates an online bookstore https://books.toscrape.com/

📝 Task 7: Scraping Books

Here is the link: https://books.toscrape.com/

We want to answer the below questions. But scraping all the pages takes several minutes. So we will scrape the data once, save it to a JSON file, and then answer the questions from the JSON file.

- How many books are there?

- Which book is cheapest?

- Which book is most expensive?

- Which books are rated 5-stars?

The JSON file might look something like:

[
  {
    "title": "A Light in the Attic",
    "price": "£51.77",
    "rating": "Three stars",
    "availability": "In stock"
  },
  {
    "title": "Tipping the Velvet",
    "price": "£53.74",
    "rating": "One star",
    "availability": "In stock"
  },
  ...
]

If adapting your previous code, you’ll need to make some changes.

- Change the text of the “Next →” link to “next” (all lowercase, no arrow).
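Because the sample records above store price and rating as strings, comparing books needs a small conversion step first. Here is a minimal sketch, assuming records shaped exactly like the sample. The helper names `parse_price` and `parse_rating` and the `RATING_WORDS` table are made up for this example.

```python
# Maps the first word of a rating string to a number (assumed format: "Three stars").
RATING_WORDS = {"One": 1, "Two": 2, "Three": 3, "Four": 4, "Five": 5}


def parse_price(price_text):
    """Convert a price string such as "£51.77" to a float."""
    return float(price_text.lstrip("£"))


def parse_rating(rating_text):
    """Convert a rating string such as "Three stars" to an int."""
    return RATING_WORDS[rating_text.split()[0]]


# The two records from the sample JSON above.
books = [
    {"title": "A Light in the Attic", "price": "£51.77",
     "rating": "Three stars", "availability": "In stock"},
    {"title": "Tipping the Velvet", "price": "£53.74",
     "rating": "One star", "availability": "In stock"},
]

cheapest = min(books, key=lambda b: parse_price(b["price"]))
print(cheapest["title"])                 # A Light in the Attic
print(parse_rating(cheapest["rating"]))  # 3
```

Comparing the raw strings would sort them alphabetically rather than numerically, which gives wrong answers as soon as prices have different numbers of digits.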

Below is how you might save the data as JSON.

🐍 Python

Python supports JSON natively.

import json

book_data = []  # empty list

# create and add a book to the list
book = {}
book["title"] = "A Light in the Attic"
book["price"] = "£51.77"
book_data.append(book)

# save to file
with open("books.json", "w") as f:
    json.dump(book_data, f)

☕️ Java

Java does not support JSON natively, so we need to use a library. We will use json-simple.

// imports
import org.json.simple.JSONArray;
import org.json.simple.JSONObject;
import java.io.FileWriter;
import java.io.IOException;

// create JSON array
JSONArray bookData = new JSONArray();

// add JSON object to the array
JSONObject book = new JSONObject();
book.put("title", "A Light in the Attic");
book.put("price", "£51.77");
bookData.add(book);

// save to file
try (FileWriter file = new FileWriter("books.json")) {
    file.write(bookData.toJSONString());
} catch (IOException e) {
    e.printStackTrace();
}

The dependencies for json-simple should already be included in the pom.xml file. If not, add the following to the <dependencies> section:

<dependency>
    <groupId>com.googlecode.json-simple</groupId>
    <artifactId>json-simple</artifactId>
    <version>1.1.1</version>
</dependency>

Good Luck!

This lab is worth marks. Be sure to get signed off.